AI Madness round 1: ChatGPT vs Perplexity

In the first round of AI Madness, we are pitting ChatGPT-4o against Perplexity AI.

OpenAI’s ChatGPT is known for consistently delivering reliable, up-to-date information, often matching or surpassing competitors. Providing nuanced answers that account for subtle variations in user queries, the chatbot responds with a natural, conversational style, often indistinguishable from human-created text.

The chatbot also excels at multimodal and creative tasks, but it can struggle with highly specific queries and with reasoning, depending on the context and complexity of the task.

Perplexity AI is an AI-powered search engine that combines natural language processing with real-time web access to deliver conversational, informative responses. It interprets user queries in context and provides personalized search results accompanied by concise summaries and inline citations. Although its output is similar to ChatGPT's, Perplexity struggles more often with hallucinations and repetitive responses.

In evaluating ChatGPT versus Perplexity, I tested both AI platforms across five specific criteria to determine their strengths and weaknesses. Here’s a breakdown of how they performed and the ultimate winner.

1. Accuracy & factuality

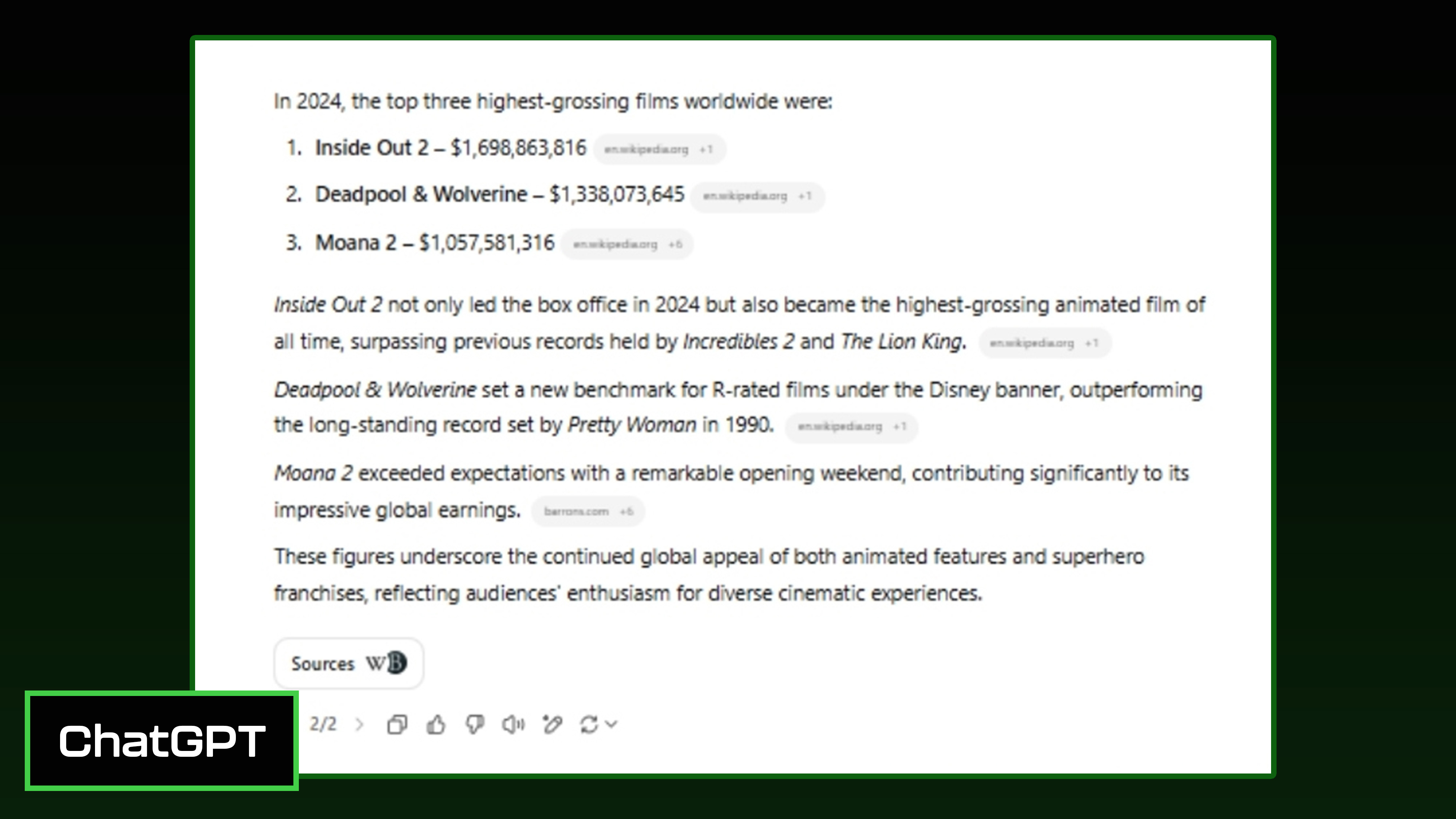

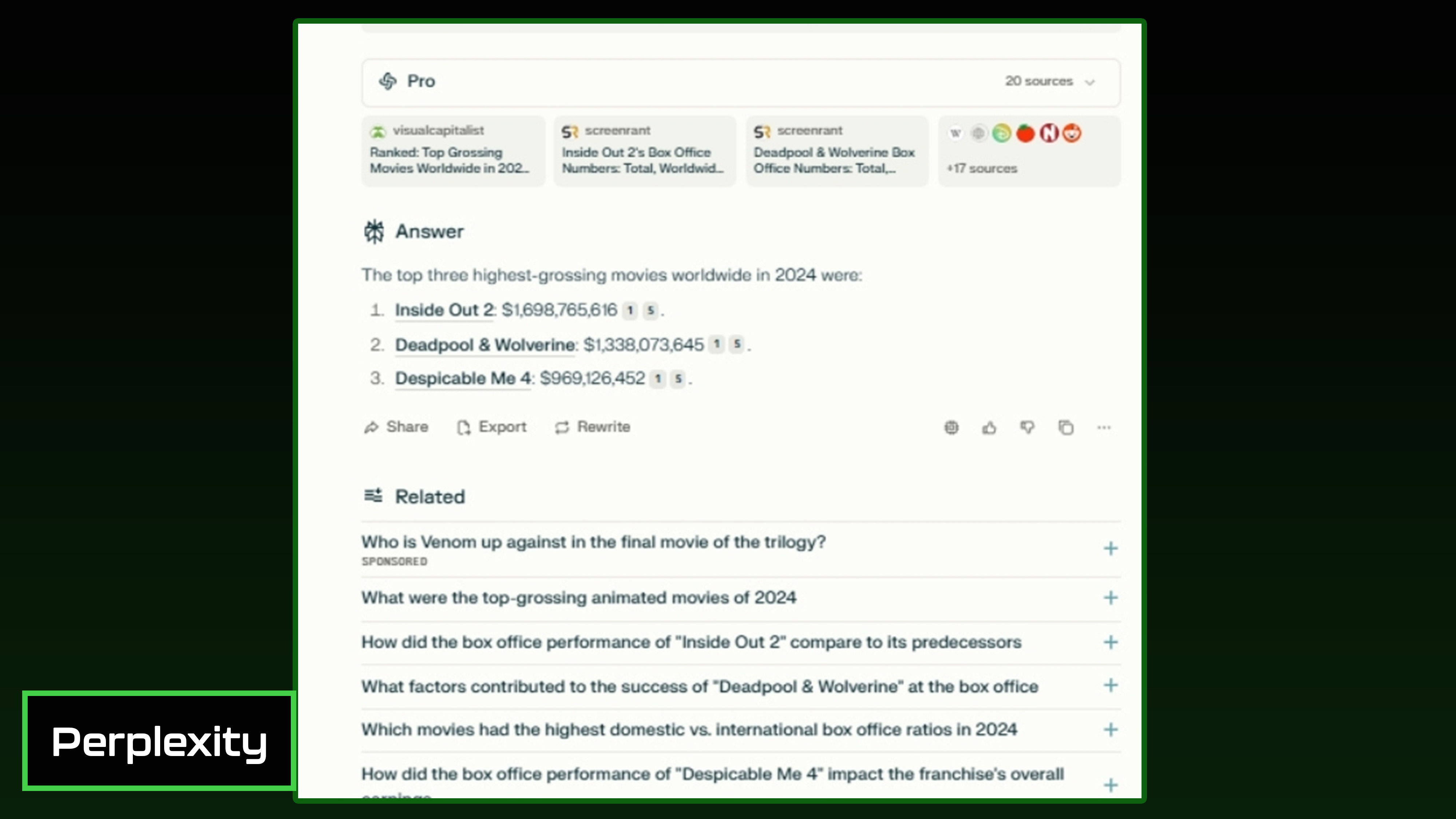

Prompt: “What were the top three highest-grossing movies worldwide in 2024, and how much did each earn?”

ChatGPT answered the prompt accurately, listing the three highest-grossing movies worldwide, including “Moana 2,” which earned significantly more than “Despicable Me 4,” the film Perplexity listed at No. 3.

Perplexity simply answered the question incorrectly, which automatically means it loses this round. Its list of related queries is informative but distracts from the main question.

ChatGPT wins for a detailed and accurate response.

2. Creativity & natural language

Prompt: “Create a whimsical conversation between a coffee mug and a smartphone, arguing about which one is more essential in daily life.”

ChatGPT came up with a playful, dynamic dialogue between the mug and the smartphone, giving them distinct voices and witty comebacks. The lively back-and-forth feels natural and is fun to read, and the stage directions bring the scene to life.

Perplexity delivered a story that feels slightly more serious and formal. It lacks the creative, lighthearted banter that ChatGPT handles so well. Instead of snappy comebacks, the mug and phone deliver monologues, making the exchange feel less like an argument and more like two characters stating their beliefs. The ending was also weaker, lacking a punchline.

ChatGPT wins for its witty, engaging, and well-structured dialogue. It feels more like a conversation between two quirky personalities rather than a debate between abstract concepts.

3. Efficiency & reasoning

Prompt: “A couple needs to choose between buying an electric car or a traditional gasoline car. List key factors they should consider and briefly explain the reasoning behind each one.”

ChatGPT delivered a clear, digestible response that explains the important factors better while remaining easy to skim. It covers several key points and ends with a stronger summary and final recommendation.

Perplexity repeated concepts across a few sections yet lacked a clear side-by-side comparison. Its response was formatted as a dense paragraph, which made it harder to skim. And while Perplexity lists important factors, it doesn’t summarize or weigh them.

ChatGPT wins for presenting the key factors in a more structured, balanced, and decision-oriented way. While Perplexity provides accurate information, ChatGPT’s organization, clarity, and user-friendliness make it the better response.

4. Usefulness & depth

Prompt: “Provide detailed instructions on how to safely back up and secure personal digital files, including the best tools, recommended practices, and common mistakes to avoid.”

ChatGPT broke the process into clear, easy-to-follow steps organized into well-defined sections. The bullet points are helpful, and the emojis add a friendly, casual touch. The common-mistakes section is useful, as are the tool recommendations.

Perplexity’s response lacked actionable instructions and was dense and less engaging. The formatting feels bulky, making it harder to skim and digest. The lack of headers and the abundance of inline citations made it feel far more complex, more like a research summary than a how-to guide.

ChatGPT wins for a highly actionable and engaging guide that is user-friendly and clear.

5. Context understanding

Prompt: “Create a storyboard outline describing each frame of a short animated sequence featuring a friendly dragon teaching kids about recycling.”

ChatGPT’s version feels like a true animated sequence with a clear beginning, middle, and end. The dialogue is natural and friendly, making it feel more engaging for young audiences.

Perplexity structured the story more like an instruction manual than an engaging animated sequence. It was not as fun or kid-friendly as ChatGPT’s version. The kids in Perplexity’s version let Draco, the dragon, do all the work, and he doesn’t guide them the way the dragon does in ChatGPT’s version.

ChatGPT wins for a response that reads like a true animated short, engages kids better, and makes learning fun through magic and interaction. Perplexity AI’s response is informative but lacks storytelling depth and excitement.

Overall winner: ChatGPT

While both ChatGPT and Perplexity demonstrated competence across the tested categories, ChatGPT emerged as the stronger choice overall, particularly excelling in creativity, depth, and a user-friendly experience.

Perplexity delivered factually accurate answers after fumbling the first round, but ChatGPT consistently provided richer, more engaging responses that made for a better overall experience.

More from Tom’s Guide
